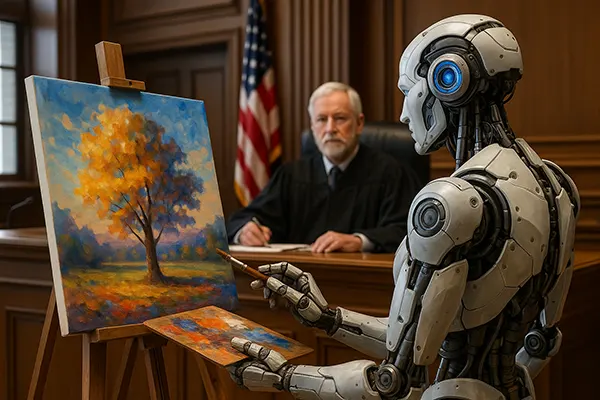

AI Artists and Copyright: Lawsuits Against Neural Networks in 2025

The year 2025 has brought a surge of legal actions and regulatory responses surrounding the use of artificial intelligence in creative domains. With AI-generated images, music, and literary works reaching unprecedented levels of sophistication, questions of ownership, intellectual property, and ethical authorship are being tackled in courtrooms across the globe. This article explores the key legal cases against AI art tools in 2025, analyses their implications, and outlines how governments and creators are responding to the emerging challenges.

Major Lawsuits in the First Half of 2025

Between January and June 2025, at least four high-profile lawsuits were filed in the United States and Europe against companies behind AI art generators. Plaintiffs included groups of illustrators, writers, and independent game designers who argued that their copyrighted works were unlawfully used to train generative models. One major case, Smith v. Genimagine Inc., is being closely followed because the complaint alleges that more than 15,000 artworks were scraped from protected art databases without consent.

In Germany, a group of digital artists filed a class-action lawsuit against a startup that they say used their portfolios for AI model training. The plaintiffs presented forensic analysis pointing to stylistic mimicry and to overlaps between their work and the training dataset. Although the case is still pending, it has already influenced discussions on the European Union’s AI Act and on potential amendments concerning data provenance and artistic ownership.

Meanwhile, in California, the nonprofit Copyright Creators Alliance is challenging several large AI platforms for failing to comply with the DMCA takedown process. Its complaint argues that AI-generated works often reproduce substantial portions of copyrighted material, so the issue is not one of “inspiration” but of direct duplication.

Legal Precedents and Regulatory Trends

Judicial trends in 2025 point to gradually stricter scrutiny of training datasets and of the transparency of AI models. While early decisions in 2023 and 2024 leaned in favour of tech companies invoking “fair use,” recent rulings suggest a pivot. Courts are beginning to recognise that deriving commercial value from datasets compiled without permission may infringe intellectual property rights.

In the UK, the Intellectual Property Office issued a new framework in May 2025, stating that “derivative AI-generated content should be disclosed with documented data lineage.” The framework encourages companies to log the datasets they use and to provide mechanisms for artists to opt out. It is not yet enforceable, but it signals a move toward standardised transparency obligations.

Regulators in Canada and South Korea are also considering legislation that would prohibit training on protected content without explicit permission from rights holders. These proposals may become a model for other jurisdictions seeking a balance between innovation and creative rights.

Artists’ Advocacy and Collective Action

In response to what they see as exploitation by AI developers, artists have become increasingly organised in 2025. International coalitions such as the Creative Rights Front and the Visual Authors Network have formed to lobby for protective legislation and to secure litigation funding. Together, they have called for mandatory dataset disclosures and for compensation mechanisms similar to music-streaming royalties.

One notable success came in April 2025 when a French court upheld a claim by a graphic novelist whose work had been repurposed into thousands of AI-generated comic strips. The ruling ordered the AI firm to cease distribution and pay €280,000 in damages. The case was hailed as a turning point and has encouraged more artists to pursue legal recourse.

Beyond litigation, creative communities are increasingly adopting blockchain-based watermarks and metadata tagging tools to detect when their works appear in AI datasets. These technological defences are not foolproof, but they are becoming more sophisticated and are increasingly built into digital publishing workflows, helping to establish traceability when disputes arise.

Public Opinion and Creator Solidarity

Surveys conducted in mid-2025 show that a majority of both creators and members of the public support stronger legal frameworks for AI-generated art. According to the Global Creator Trust Index, 72% of professional artists believe AI systems should require explicit permission to use existing artworks as training material.

This sentiment has fuelled broader solidarity campaigns. In Japan, more than 500 manga artists joined a one-day “data strike,” refusing to upload new work to online platforms that lacked clear licensing terms. Similar protests took place in Brazil and Italy, with support from musicians and voice actors concerned about AI impersonation.

While not all protests have led to immediate results, the growing public discourse has influenced government hearings and policy drafts. Cultural ministries in at least five countries have since initiated consultations with artist associations on AI ethics and rights management protocols.

The Future of Copyright in the Age of Generative AI

As the legal environment continues to evolve, one of the most contentious debates is whether AI-generated works can or should be copyrighted at all. In the US, the Copyright Office reaffirmed in June 2025 that copyright protection applies only to works created by humans. This stance aligns with similar decisions in Australia and India but contrasts with China’s more permissive approach, where AI-assisted creations may be eligible under specific conditions.

Legal scholars argue that, while full protection for AI outputs may not be appropriate, a new category of “AI-assisted authorship” could emerge. Such a category would acknowledge the human role in prompting or refining generative outputs, especially where a person contributes substantial creative input. It could offer a middle ground between the positions of creators and technologists.

Moreover, international organisations such as WIPO are actively exploring treaties or model laws to harmonise AI-related IP rights. With input from artists, tech companies, and legal experts, these frameworks may set global standards on attribution, royalties, and dataset ethics in the near future.

Conclusion: The Legal Shift

By mid-2025, it has become clear that AI-generated content is no longer a peripheral concern but a central issue in global copyright law. Artists are no longer passive observers; they are shaping the future of their rights through courts, codes, and coalitions. The rapid development of legal tools and regulatory principles reflects a shared recognition: creativity, whether human or machine-assisted, must exist within an ethical and legally grounded ecosystem.

While the path to full legislative clarity may still take years, the foundational battles are being fought now. The cases and trends of 2025 serve as both cautionary tales and building blocks for a new era of digital authorship. Stakeholders across the creative and legal spheres are called upon to participate, regulate, and innovate responsibly.

Ultimately, the AI and copyright landscape in 2025 reveals a critical truth: technology may evolve rapidly, but the protection of human creativity remains a deeply rooted societal value.